Last week I came across a miscategorized eBay listing for an Electronic Development Corp (EDC, now owned by Krohn-Hite) 521 DC Voltage/Current Source. It was listed in the network equipment section, with “Juniper” as the manufacturer.

The EDC 521 is a precision DC reference source with high accuracy, precision and stability, intended for calibrating meters and sensors. It can output voltage in three ranges (0-100mV, 0-10V and 0-100V) and constant current in two ranges, 10mA and 100mA (with compliance voltages up to 100V). In each range, the adjustment resolution is 1ppm. Overall stability in voltage mode, within the device’s operating temperature range, is 7.5ppm over 8 hours, 10ppm over 24 hours, 15ppm over 90 days, and 20ppm over a year. These figures include the effect of the temperature coefficient. It is microprocessor controlled and has a GPIB interface for remote control.
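To put those ppm figures in perspective, here is a small sketch that converts them to absolute voltages on each range. It assumes the figures are ppm of full scale, which is my reading rather than something confirmed from the manual; the names `RANGES_V`, `STABILITY_PPM` and `ppm_to_volts` are just illustrative.

```python
# Full-scale values of the three voltage ranges, in volts.
RANGES_V = {"100mV": 0.1, "10V": 10.0, "100V": 100.0}

# Stability figures quoted in the text, in ppm.
STABILITY_PPM = {"8 hours": 7.5, "24 hours": 10.0, "90 days": 15.0, "1 year": 20.0}


def ppm_to_volts(ppm, full_scale_v):
    """Convert a ppm figure to volts, ASSUMING it is ppm of full scale."""
    return ppm * 1e-6 * full_scale_v


if __name__ == "__main__":
    for name, full_scale in RANGES_V.items():
        # One adjustment step (1ppm) and the one-year stability band, in microvolts.
        step_uv = ppm_to_volts(1.0, full_scale) * 1e6
        year_uv = ppm_to_volts(STABILITY_PPM["1 year"], full_scale) * 1e6
        print(f"{name:>6}: 1ppm step = {step_uv:g} uV, 1-year stability = {year_uv:g} uV")
```

On the 10V range, for example, a 1ppm step works out to 10 microvolts and the one-year figure to 200 microvolts, under that full-scale assumption.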

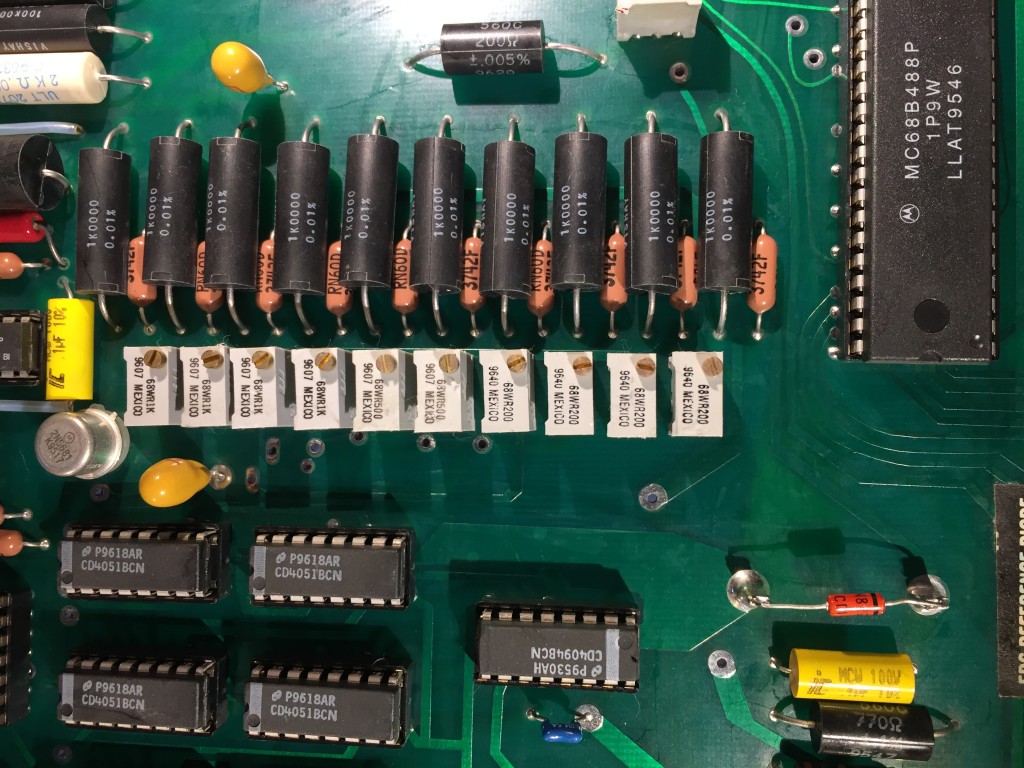

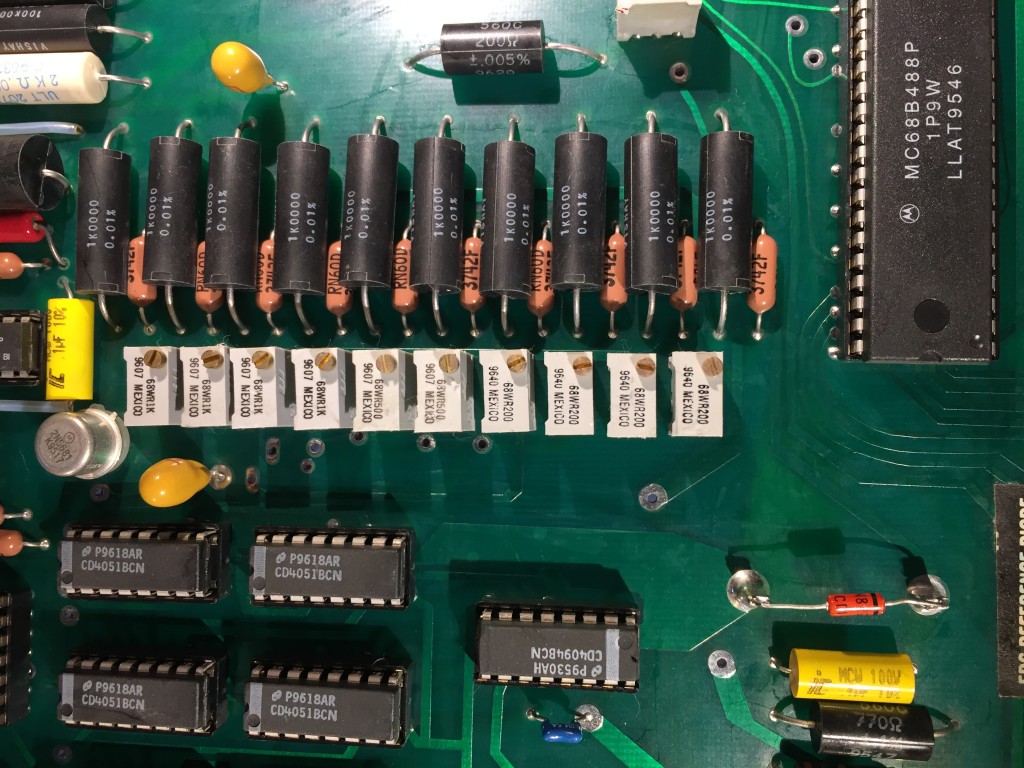

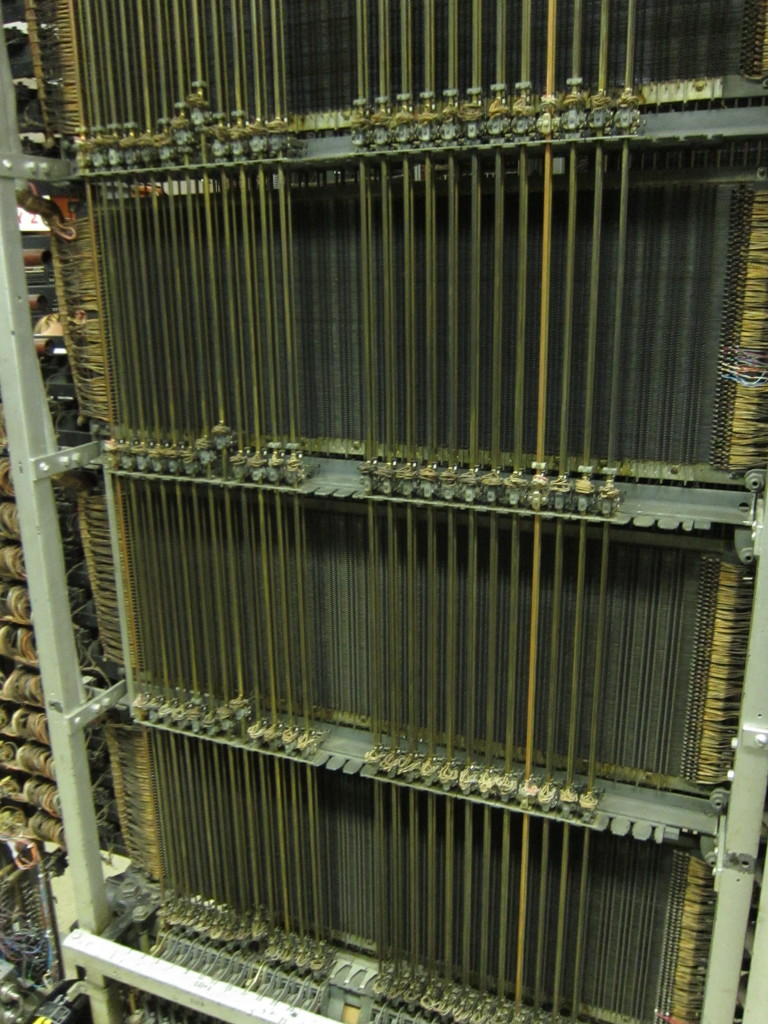

To achieve its basic stability, it uses an aged and selected 1N829 temperature-compensated Zener diode as its primary voltage reference. This diode is driven by a stable precision current source at a current chosen to provide the best combination of temperature stability, long-term drift and low noise for the individual diode used in each unit. Adjustments are made using a custom, precision 24-bit digital-to-analog converter.

The DAC works by feeding the reference voltage across a resistor divider to obtain 10 output voltages, tapped at 500mV intervals. If I understand correctly, these voltages are switched to provide an analog voltage for each decade. Those voltages are buffered, then weighted and summed using precision resistors before being fed to the output amplifier.
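As a rough numerical model of that description (not a trace of the actual circuit), each decade can be treated as picking one tap voltage, which is then scaled by a power of ten and summed. Several things here are assumptions for illustration: six decades (which would give 1ppm resolution, and six 4-bit digits would also match the 24-bit figure), taps running from 0V to 4.5V, and a hypothetical output gain of 2 to reach a 10V range.

```python
TAP_STEP_V = 0.5     # tap spacing on the reference divider, from the description
N_DECADES = 6        # ASSUMPTION: six decimal digits for 1ppm resolution
OUTPUT_GAIN = 2.0    # ASSUMPTION: hypothetical output amplifier gain for the 10V range


def decade_dac(digits, gain=OUTPUT_GAIN):
    """Model the weighted sum of one tap voltage per decade.

    digits: sequence of N_DECADES integers 0..9, most significant first.
    Returns the modelled output voltage in volts.
    """
    if len(digits) != N_DECADES:
        raise ValueError(f"expected {N_DECADES} digits, got {len(digits)}")
    if any(not 0 <= d <= 9 for d in digits):
        raise ValueError("each digit must be in the range 0..9")
    # Digit d selects the tap at d * 0.5V; decade k is weighted by 10**-k.
    summed = sum(d * TAP_STEP_V * 10.0 ** -k for k, d in enumerate(digits))
    return gain * summed


if __name__ == "__main__":
    print(decade_dac([1, 0, 0, 0, 0, 0]))  # most significant digit only
    print(decade_dac([0, 0, 0, 0, 0, 1]))  # least significant digit only
```

In this model the least significant digit moves the output by 10 microvolts, which is 1ppm of a 10V full scale. The real instrument may well distribute the gain and weighting differently.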

When the package arrived yesterday, I saw why the listing had been miscategorized: it was packed in a box for a Juniper Networks switch. That, and the sticker noting a failed calibration attempt in 2009, makes me doubt the seller’s assertion that it was “pulled from a working environment.” Not that I expected a pristine, calibrated instrument for $150.

Inside the box, I found things in somewhat worse physical shape than I expected. What I thought was shadow or glare in the photo from the eBay listing was actually a torn red filter over the LED display. And the underside of the case, which wasn’t pictured in the listing, had a huge dent.

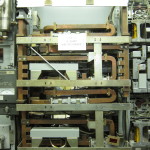

On closer inspection, the dent didn’t reach the PCB inside, and I was able to remove the panel and hammer it out. Once inside, I found that everything had a fine coating of persistent dust. Hitting it with canned air shook some of it loose, but most of it remained.

So, I got to work rinsing it with a lot of isopropyl alcohol, which I then chased off the edge of the board with canned air. After a few repetitions, the top and bottom sides of the board were pretty clean. I then looked over both sides of the board closely, checking for damaged components and cleaning out little pockets of residue.

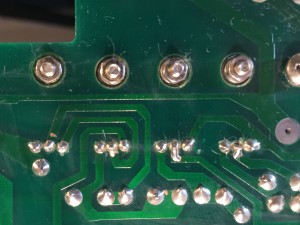

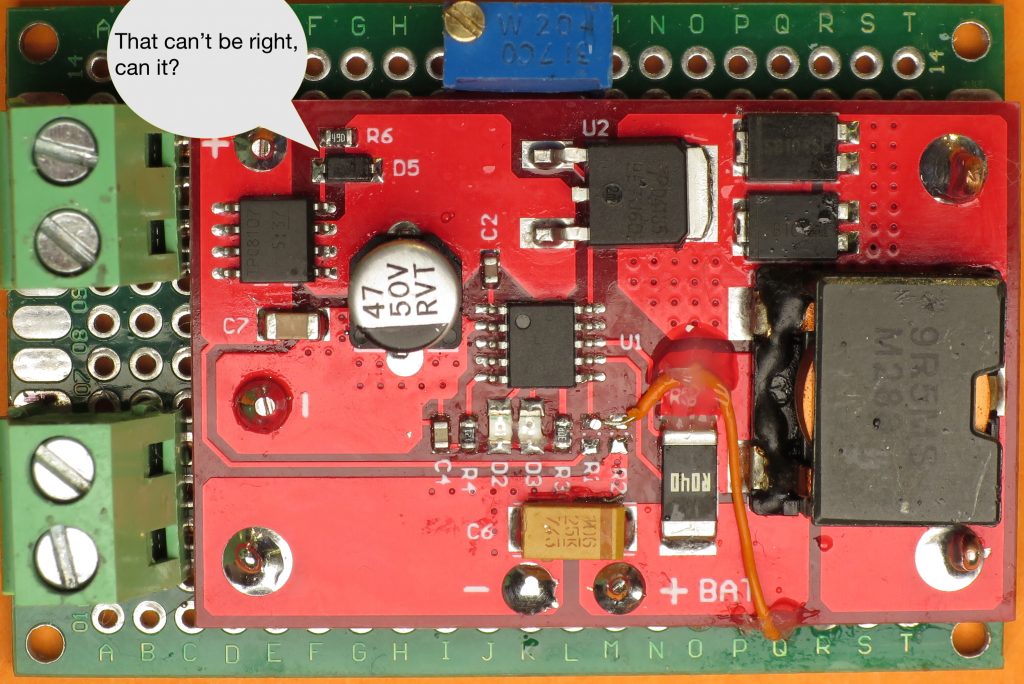

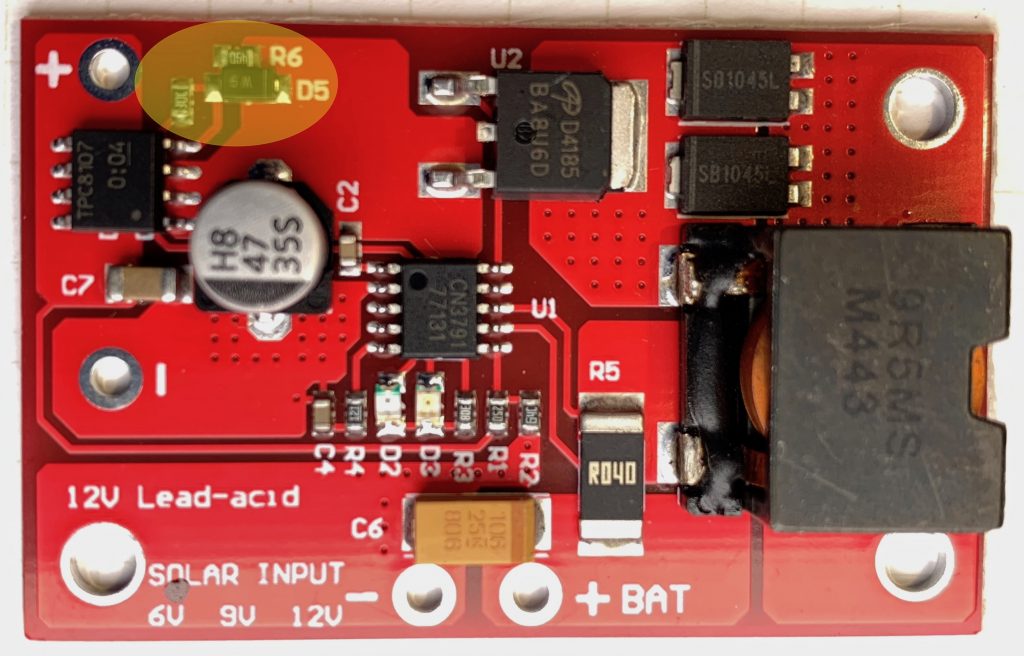

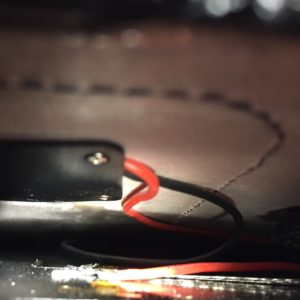

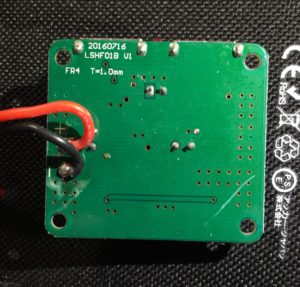

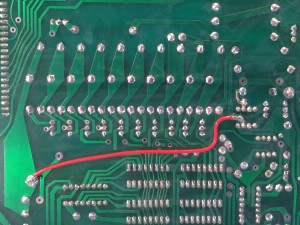

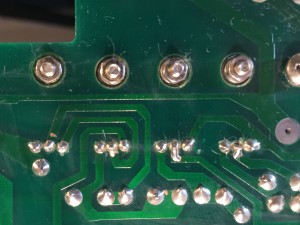

I didn’t see any damaged components, but along the way I noticed signs that the board had received some major revisions. There was an obvious bodge wire on the bottom of the PCB, and it was also clear that new holes had been drilled to receive additional components. On the top side, I found a cut trace, along with a couple of added resistors and a couple of capacitors. I haven’t traced everything out, but it’s obvious that the bodge wire connects to one end of the internal reference divider, and the rest of the additions are on the opposite end, so it seems likely that they help isolate the reference divider, and the voltages it produces, from noise sources.

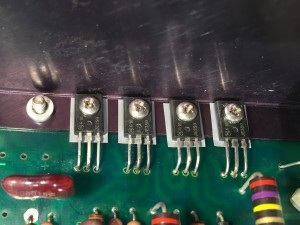

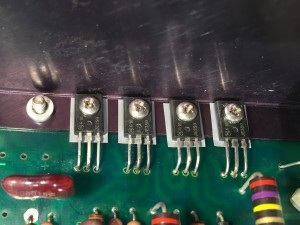

It also appears that a number of power transistors have been replaced. Unfortunately, none of the components in question have obvious date codes, so it’s hard to guess when the modifications were done, and whether the transistors and the filters were added at the same time. Perhaps one of you knows how to decode the markings? The first line is a Motorola logo followed by “616,” and the next line is “JE350,” which is the model/part number. The date codes on other components pretty much all fall in late 1996, and the MPU board has a label with the firmware revision that is dated January 1997.

Before closing it up, I took care of the loose plastic supports for the back edge of the PCB, which holds heavy electrolytic filter caps for the power supply. I cleaned off the old, crusty, failed double-sided foam tape and replaced it with new tape so I could stick the supports to the back of the chassis again.

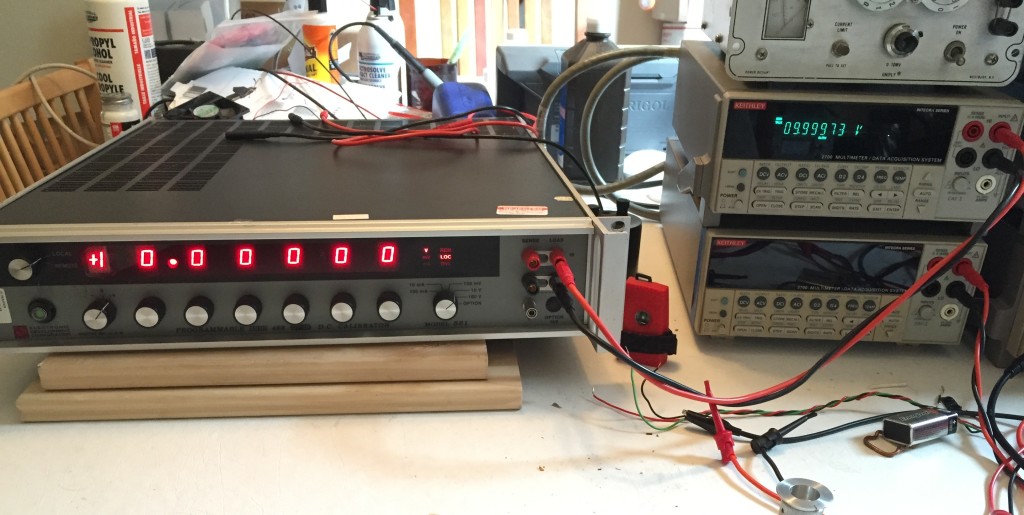

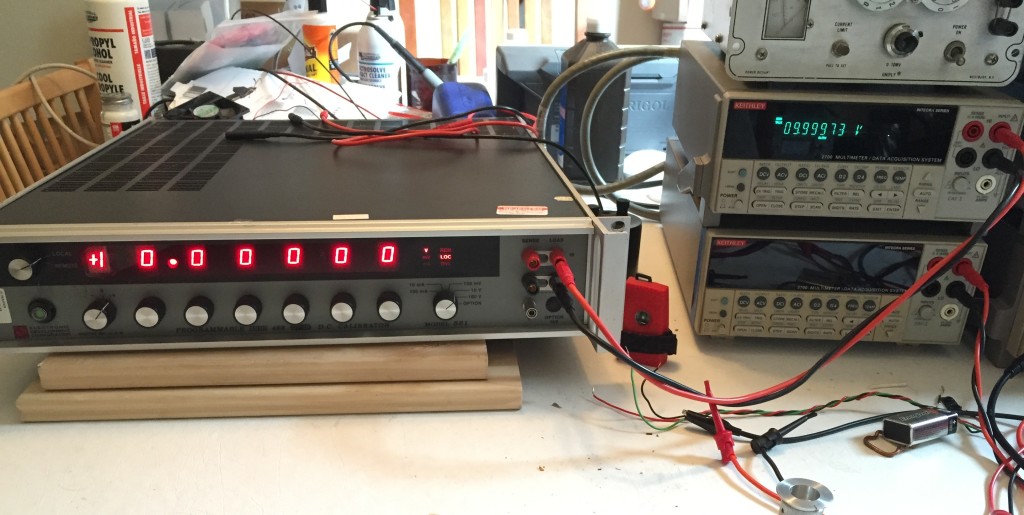

I powered it up and gave it a quick check on all the voltage and current ranges. It seems pretty close to its 1-year tolerances. I was surprised by the amount of time it took to warm up and stabilize, but when I checked the manual, I saw that the warm-up time is specified at 2 hours.

I powered it down overnight. This morning I set up my computer to log voltage readings every few seconds and then powered it back up. I’ll post a graph once I have a day’s worth of data. After that, I’m going to write a script to run through all the possible settings and log the measurements. So, more to come!
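For anyone wanting to do something similar, a logging loop along these lines would work as a starting point. This is a minimal sketch, not my actual setup: `read_voltage` is a hypothetical callable standing in for whatever code talks to the meter, and `log_readings` and `drift_ppm` are names made up for the example.

```python
import csv
import time
from datetime import datetime, timezone


def log_readings(read_voltage, path, count, interval_s=5.0, sleep=time.sleep):
    """Append `count` timestamped readings to a CSV file.

    read_voltage: HYPOTHETICAL callable returning one reading in volts;
                  in practice it would wrap the meter's interface.
    sleep:        injectable so the loop can be exercised without waiting.
    """
    with open(path, "a", newline="") as f:
        writer = csv.writer(f)
        for i in range(count):
            stamp = datetime.now(timezone.utc).isoformat()
            writer.writerow([stamp, str(read_voltage())])
            f.flush()  # keep earlier rows on disk if the run is interrupted
            if i < count - 1:
                sleep(interval_s)


def drift_ppm(readings, nominal_v):
    """Peak-to-peak spread of the readings, in ppm of the nominal setting."""
    return (max(readings) - min(readings)) / nominal_v * 1e6
```

Passing the reader in as a function keeps the loop independent of the instrument interface, so it can be tried out with a fake reader before pointing it at real hardware.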
